Disclaimer: This malware sample is not in any way related to Hacking Team (as far as I know) other than me making some jokes about them related to a future presentation about their OS X malware product.

Two months ago (maybe three) I started noticing a sporadic redirect when I accessed these blog pages. It wasn’t anything “malicious” as far as I could evaluate, just a redirect to an adult friend finder site. A friend did some initial research on the site pages and content and could not find anything relevant there, other than a very old Zend-encoded backdoor (LOL!). I also poked around the database contents and they appeared clean. Having read about some recent Linux rootkits injecting iframes and that kind of stuff, I was convinced the shared server had been hacked. Other tasks were calling for my attention, so this matter got sidetracked.

Last week some readers started complaining about the redirects, and it was finally time to find out what was really happening. I asked my great hosting friends at HighSpeedWeb to give me r00t so I could find the problem. My instinct was right: I found a new variant of Linux/CDorked.A, the Apache backdoor that made headlines at the beginning of 2013. Unfortunately I have no idea how the breach started, but my bet is on a local root exploit (the server was running KSplice). Shared servers are a pain to manage, with all kinds of vulnerable scripts being installed. For some background on Linux/CDorked.A you should have a look at the following articles:

- Linux/Cdorked.A: New Apache backdoor being used in the wild to serve Blackhole

- ESET and Sucuri Uncover Linux/Cdorked.A: The Most Sophisticated Apache Backdoor

- Apache Binary Backdoors on Cpanel-based servers

- Malware.lu Technical Analysis of CDorked.A

- Ebury SSH Rootkit – Frequently Asked Questions (updated info, recommended!)

The two warning signs are a modified httpd binary linked against the open_tty symbol and a shared memory segment 6118512 bytes long. This server’s httpd binary showed no signs of compromise, and that specific shared memory segment did not exist. Running the ipcs command, however, revealed a very suspicious shared memory segment, slightly bigger than the one mentioned in those articles and detection tools.

```
sh-4.1# ipcs -m

------ Shared Memory Segments --------
key        shmid      owner      perms      bytes      nattch     status
0x01006cab 447545344  root       600        1200712    8
0x00003164 447152129  nobody     600        7620272    5          <- the backdoor segment
0x00000a6a 13729803   root       666        3282312    0
0x000009e4 404193300  root       666        3179912    0
```
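Both warning signs are easy to script. The Python sketch below is illustrative only and is not one of the published detection tools. It checks a binary for the `open_tty` string and parses `ipcs -m` output. An exact-size match on 6118512 bytes would have missed this variant, so the sketch instead flags any segment at least that large. That threshold is an assumption and will also flag legitimate large segments.

```python
import re

# Segment size reported for the original Linux/CDorked.A.
CDORKED_A_SHM_BYTES = 6118512


def binary_mentions_open_tty(path):
    """Return True if the file at `path` contains the string "open_tty"."""
    with open(path, "rb") as f:
        return b"open_tty" in f.read()


def parse_ipcs_m(text):
    """Parse the data rows of `ipcs -m` output into dictionaries."""
    segments = []
    for line in text.splitlines():
        fields = line.split()
        # Data rows start with a hexadecimal key and have at least 6 columns;
        # the banner and the column header line are skipped.
        if len(fields) >= 6 and re.fullmatch(r"0x[0-9a-fA-F]+", fields[0]):
            segments.append({
                "key": fields[0],
                "shmid": int(fields[1]),
                "owner": fields[2],
                "perms": fields[3],
                "bytes": int(fields[4]),
                "nattch": int(fields[5]),
            })
    return segments


def suspicious_segments(segments, min_bytes=CDORKED_A_SHM_BYTES):
    """Heuristic: flag segments at least as large as the CDorked.A one."""
    return [s for s in segments if s["bytes"] >= min_bytes]
```

Fed the output above, only the 7620272-byte segment owned by `nobody` is flagged.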

A cPanel support technician was also poking around the server and recompiled Apache. He declared victory because the redirect wasn’t happening anymore. Too soon, my friend! The next day the redirect was still active, and this time I was sure the httpd binary wasn’t modified: I had stored the checksums so I could compare them. Ok, we have a mystery here. Something is definitely being injected into the httpd server, but the binary is not modified on the filesystem. It could be a kernel rootkit (Crowdstrike’s analysis of such a rootkit here), an Apache module, or something else. Under these assumptions my first task was to gather a memory dump and analyse it with Volatility. Nothing suspicious was found on the kernel side, so I temporarily ruled out the kernel rootkit hypothesis. Apache supports filter chains, so that would be a good spot for injection and was my next step. I did some brief tracing there but couldn’t find anything. Keep in mind this was a live server with many hits, so I needed to be careful to avoid downtime. Good old habits from managing the Portuguese ATM network.

Next hypothesis: assuming the httpd binary on disk is OK, is the binary in memory the same?
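One quick way to test that hypothesis, assuming root access to /proc, is to hash `/proc/<pid>/exe` and the file on disk and compare them. Opening `/proc/<pid>/exe` yields the inode the process was actually started from, even if that file has since been replaced or deleted. A minimal Python sketch follows. The function names are illustrative and not taken from any tool used in this post.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large binaries need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def running_image_matches_disk(pid, disk_path):
    """True if the executable a process runs is identical to `disk_path`.

    A mismatch means the file on disk is not what is actually running,
    which is exactly the situation a swap-and-restore backdoor creates.
    """
    return sha256_of(f"/proc/{pid}/exe") == sha256_of(disk_path)
```

For example, `running_image_matches_disk(456404, "/usr/local/apache/bin/httpd")` would be expected to return False while the trojaned httpd is running.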

Time to dump the httpd binary from memory. Volatility had some problems finding the original binary using the linux_find_file command. I could dump individual segments, but that is a mess to analyse in IDA.

How the hell do you (easily) dump a full binary in Linux? I left Linux 5 years ago! Hum… let’s core dump httpd. The first time it worked ok and I got the core dump to load in IDA. Later I tried again and this time it failed.

```
sh-4.1# gcore 74765
core.JgKHE6:4: Error in sourced command file:
(deleted)/usr/local/apache/bin/httpd: No such file or directory.
gcore: failed to create core.74765
```

Now this is a good clue to what is happening here. It might also explain some of Volatility’s trouble finding the httpd process (whether deleted binaries can fool Volatility’s analysis is something I still have to test). Let’s take a look at procfs to see what’s happening:

```
sh-4.1# ls -la /proc/456404/
total 0
dr-xr-xr-x   6 root root 0 Feb  1 19:08 .
dr-xr-xr-x 358 root root 0 Oct  9 16:35 ..
-r--------   1 root root 0 Feb  2 18:00 auxv
-r--r--r--   1 root root 0 Feb  2 18:00 cgroup
--w-------   1 root root 0 Feb  2 18:00 clear_refs
-r--r--r--   1 root root 0 Feb  1 19:08 cmdline
-rw-r--r--   1 root root 0 Feb  2 18:00 coredump_filter
-r--r--r--   1 root root 0 Feb  2 18:00 cpuset
lrwxrwxrwx   1 root root 0 Feb  2 18:00 cwd -> /
-r--------   1 root root 0 Feb  2 18:00 environ
lrwxrwxrwx   1 root root 0 Feb  1 19:09 exe -> (deleted)/usr/local/apache/bin/httpd
dr-x------   2 root root 0 Feb  2 04:09 fd
dr-x------   2 root root 0 Feb  2 18:00 fdinfo
```
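A `exe -> (deleted)...` link like the one above is easy to scan for across every process. Linux normally appends " (deleted)" to the link target, but this listing shows it as a prefix, so the hypothetical helper below accepts either placement. It is a sketch in Python, run as root so other users' `exe` links are readable.

```python
import os

DELETED_MARKER = "(deleted)"


def is_deleted_target(target):
    """Recognise a deleted-file link target with the marker as prefix or suffix."""
    return target.startswith(DELETED_MARKER) or target.endswith(" " + DELETED_MARKER)


def processes_with_deleted_exe(proc_root="/proc"):
    """Yield (pid, link_target) for processes whose executable was unlinked."""
    for name in os.listdir(proc_root):
        if not name.isdigit():
            continue  # skip /proc/self, /proc/sys, ...
        try:
            target = os.readlink(os.path.join(proc_root, name, "exe"))
        except OSError:
            # Kernel threads have no exe link, other users' processes need
            # root, and processes may exit while we iterate.
            continue
        if is_deleted_target(target):
            yield int(name), target
```

On this server, every httpd worker started by the backdoor would show up in `list(processes_with_deleted_exe())`.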

The original binary has been deleted! Definitely a good clue to understand why the httpd binary checksums are always ok. Let’s look at the memory map and verify the inode information:

```
sh-4.1# cat /proc/456404/maps
00400000-00539000 r-xp 00000000 fd:00 3277341 (deleted)/usr/local/apache/bin/httpd
00739000-00745000 rw-p 00139000 fd:00 3277341 (deleted)/usr/local/apache/bin/httpd
00745000-0074a000 rw-p 00000000 00:00 0
01a89000-04e26000 rw-p 00000000 00:00 0
04e26000-04e48000 rw-p 00000000 00:00 0
```
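In `/proc/<pid>/maps` the fourth and fifth columns are the device (`fd:00`) and inode (3277341) backing each mapping. The inode is what you need to go after a deleted file. A small Python sketch of extracting it follows. The helper names are hypothetical.

```python
from collections import namedtuple

Mapping = namedtuple("Mapping", "start end perms offset dev inode path")


def parse_maps_line(line):
    """Split one /proc/<pid>/maps line into its fields."""
    # The path column is optional (anonymous mappings) and may contain spaces,
    # so split at most five times and keep the remainder as the path.
    parts = line.split(None, 5)
    start, end = (int(x, 16) for x in parts[0].split("-"))
    path = parts[5] if len(parts) > 5 else ""
    return Mapping(start, end, parts[1], int(parts[2], 16), parts[3], int(parts[4]), path)


def deleted_file_inodes(maps_text):
    """Return {(dev, inode): path} for file-backed mappings marked deleted."""
    found = {}
    for line in maps_text.splitlines():
        if not line.strip():
            continue
        m = parse_maps_line(line)
        if m.inode != 0 and "(deleted)" in m.path:
            found[(m.dev, m.inode)] = m.path
    return found
```

For the map above this yields `{("fd:00", 3277341): "(deleted)/usr/local/apache/bin/httpd"}`. debugfs accepts an inode number in angle brackets (for example `<3277341>`) wherever it expects a file name.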

We can use debugfs to poke at that inode and recover the original binary! This link is a good reference on how to do this. The first time I tried, I could not find the original binary because it had already been overwritten by some other file. Meanwhile I had noticed some strange behavior by httpd:

```
sh-4.1# ps aux | grep htt
root     800497  9.7  0.2 129792 35792 ?     Ss   10:08   0:00 /usr/local/apache/bin/httpd -k start -DSSL
root     800510  0.0  0.1 128528 32196 ?     S    10:08   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   800511  0.0  0.2 129704 32644 ?     S    10:08   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   800514  0.0  0.2 129932 33984 ?     S    10:08   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   800515  0.3  0.2 130056 35088 ?     S    10:08   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   800516  2.0  0.2 131812 35864 ?     S    10:08   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   800517  1.0  0.2 130236 35440 ?     S    10:08   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   800518  0.6  0.2 130464 34500 ?     S    10:08   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   800532  0.5  0.2 130192 34236 ?     S    10:08   0:00 /usr/local/apache/bin/httpd -k start -DSSL
root     800546  0.0  0.0 103232   784 pts/0 S+   10:08   0:00 grep htt

sh-4.1# ps aux | grep httpd
root     817699  0.3  0.2 129828 35832 ?     Ss   10:37   0:00 /usr/local/apache/bin/httpd -k start -DSSL
root     817713  0.0  0.1 128564 32188 ?     S    10:37   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   817715  0.0  0.2 129876 32780 ?     S    10:37   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   817864  0.0  0.2 137940 34880 ?     S    10:37   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   817874  0.1  0.2 139968 36876 ?     S    10:37   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   817877  0.1  0.2 140196 37068 ?     S    10:37   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   817880  0.1  0.2 139320 36260 ?     S    10:37   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   818204  0.0  0.2 137812 34768 ?     S    10:38   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   818294  0.0  0.2 137812 34384 ?     S    10:38   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   818528  0.2  0.2 139292 36112 ?     S    10:39   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   818529  0.0  0.2 137404 34092 ?     S    10:39   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   818530  0.0  0.2 137548 34140 ?     S    10:39   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   818546  0.0  0.2 137812 34356 ?     S    10:39   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   818547  0.0  0.2 137412 34088 ?     S    10:39   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   818548  0.0  0.2 137808 34344 ?     S    10:39   0:00 /usr/local/apache/bin/httpd -k start -DSSL
nobody   818549  0.0  0.2 137412 34000 ?     S    10:39   0:00 /usr/local/apache/bin/httpd -k start -DSSL
root     818592  0.0  0.0 103236   792 pts/0 S+   10:39   0:00 grep httpd
```

After I restarted Apache, it would be restarted again a few minutes later, and this time the original binary would show up as deleted. My hypothesis was a cron job responsible for reloading the trojaned httpd binary, but I checked all the cron jobs and found nothing suspicious. I assumed the backdoor was being reloaded twice an hour, but this was wrong, as I will explain later on. At this point I just needed to restart Apache, wait for the backdoor to be activated, and immediately recover the trojaned binary. I grabbed the binary, loaded it into IDA and compared it with the Linux/CDorked.A sample. Bingo, it’s very similar. The function names differ (hash-like names in the known variant, single-letter names in this one), but the code is very similar. Have a look at the Apache function ap_read_request, which is where the backdoor has its modified code to filter requests.

Next big question: how is the trojaned binary started, and where is it in the filesystem? At this point I knew that something runs after the legit Apache is restarted, replaces it with a trojaned version and then restores the original file.

What is the easiest way to detect this? Trace the process activity! I have some very cool tricks on OS X to do this (other than DTrace) but had no idea what was new in this area on Linux. A quick web search led me to this page, which has a small example of a process tracer using netlink proc events. I gave it a try and it was good enough for this purpose. SystemTap is another (and probably better) alternative, but I was looking for a quick solution. You should definitely have both in your malware analysis toolset.
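The idea behind a netlink proc events tracer is small. You open a `NETLINK_CONNECTOR` socket, join the `CN_IDX_PROC` group and send a `PROC_CN_MCAST_LISTEN` request, and the kernel then multicasts a message for every fork, exec and exit. The Python sketch below is a re-creation of that idea written for illustration. It is not the C sample the post links to. The constants are copied from the kernel headers named in the comment, and only fork, exec and exit events are decoded. The uid/gid change events visible in the log are ignored.

```python
import os
import socket
import struct

# Constants from <linux/netlink.h>, <linux/connector.h> and <linux/cn_proc.h>
NETLINK_CONNECTOR = 11
CN_IDX_PROC = 1
CN_VAL_PROC = 1
NLMSG_DONE = 3
PROC_CN_MCAST_LISTEN = 1
PROC_EVENT_FORK = 0x00000001
PROC_EVENT_EXEC = 0x00000002
PROC_EVENT_EXIT = 0x80000000

NLMSGHDR = struct.Struct("=IHHII")      # nlmsg_len, type, flags, seq, pid
CN_MSG = struct.Struct("=IIIIHH")       # id.idx, id.val, seq, ack, len, flags
PROC_EVENT_HDR = struct.Struct("=IIQ")  # what, cpu, timestamp_ns


def build_subscribe_message(pid):
    """Netlink message asking the kernel to start multicasting process events."""
    payload = struct.pack("=I", PROC_CN_MCAST_LISTEN)
    cn = CN_MSG.pack(CN_IDX_PROC, CN_VAL_PROC, 0, 0, len(payload), 0)
    total = NLMSGHDR.size + len(cn) + len(payload)
    return NLMSGHDR.pack(total, NLMSG_DONE, 0, 0, pid) + cn + payload


def parse_event(buf):
    """Decode one proc connector datagram into a small tuple, or None."""
    offset = NLMSGHDR.size + CN_MSG.size
    what, _cpu, _timestamp = PROC_EVENT_HDR.unpack_from(buf, offset)
    data = offset + PROC_EVENT_HDR.size
    if what == PROC_EVENT_FORK:
        _ppid, parent_tgid, _cpid, child_tgid = struct.unpack_from("=iiii", buf, data)
        return ("fork", parent_tgid, child_tgid)
    if what == PROC_EVENT_EXEC:
        _pid, tgid = struct.unpack_from("=ii", buf, data)
        return ("exec", tgid)
    if what == PROC_EVENT_EXIT:
        _pid, tgid, exit_code, _signal = struct.unpack_from("=iiII", buf, data)
        return ("exit", tgid, exit_code)
    return None  # uid/gid/sid/comm and other events are ignored here


def trace(handler=print):
    """Report fork/exec/exit events until interrupted (Linux, root only)."""
    sock = socket.socket(socket.AF_NETLINK, socket.SOCK_DGRAM, NETLINK_CONNECTOR)
    sock.bind((os.getpid(), CN_IDX_PROC))
    sock.send(build_subscribe_message(os.getpid()))
    while True:
        event = parse_event(sock.recv(4096))
        if event is not None:
            handler(event)
```

Each exec event carries only a PID, so the binary and arguments still have to be read from /proc afterwards. Short-lived processes may exit before that happens.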

Now I could see a strange incoming SSH connection executing commands, after which the trojaned Apache was running. I modified the code sample into something that dumps the binary being executed and its command line parameters. Code sample available here. Other solutions such as ttysnoop could have been used, but I wanted to disrupt the server as little as possible.

This allowed me to easily find out what was happening. Here is a sample output of an older version of the modified tracer utility (not the whole chain of commands, just a few selected ones):

```
now: 2014-1-31 11:21:43
PID: 181941 uid: 0 gid: 0
181941 cmdline:/usr/sbin/sshd-R
exec: tid=181941 pid=181941 /usr/sbin/sshd
fork: parent tid=181941 pid=181941 -> child tid=181942 pid=181942
gid change: tid=181942 pid=181942 from 74 to 74
uid change: tid=181942 pid=181942 from 74 to 74
fork: parent tid=37151 pid=37151 -> child tid=181943 pid=181943
exit: tid=181943 pid=181943 exit_code=0
exit: tid=181942 pid=181942 exit_code=0
fork: parent tid=181941 pid=181941 -> child tid=181944 pid=181944
now: 2014-1-31 11:21:50
PID: 181944 uid: 0 gid: 0
181944 cmdline:bash-cecho GOOD
exec: tid=181944 pid=181944 /bin/bash
fork: parent tid=181944 pid=181944 -> child tid=181945 pid=181945
fork: parent tid=181945 pid=181945 -> child tid=181946 pid=181946
now: 2014-1-31 11:21:50
PID: 181946 uid: 0 gid: 0
181946 cmdline:whoami
exec: tid=181946 pid=181946 /usr/bin/whoami
exec: tid=181964 pid=181964 /bin/bash
fork: parent tid=181964 pid=181964 -> child tid=181965 pid=181965
now: 2014-1-31 11:21:51
PID: 181965 uid: 0 gid: 0
181965 cmdline:/usr/bin/md5sum/usr/local/apache/bin/httpd/usr/sbin/arpd
exec: tid=181965 pid=181965 /usr/bin/md5sum
now: 2014-1-31 11:21:53
PID: 181978 uid: 0 gid: 0
181978 cmdline:cp-a/usr/bin/s2p /tmp/X3Cm2rXtncgR7scn_m.tmp
exec: tid=181978 pid=181978 /bin/cp
now: 2014-1-31 11:21:53
PID: 181980 uid: 0 gid: 0
181980 cmdline:mv-f/tmp/X3Cm2rXtncgR7scn_m.tmp/usr/local/apache/bin/httpd
exec: tid=181980 pid=181980 /bin/mv
now: 2014-1-31 11:21:53
PID: 181981 uid: 0 gid: 0
181981 cmdline:/bin/sh/sbin/servicehttpdstop
exec: tid=181981 pid=181981 /bin/bash
now: 2014-1-31 11:22:0
PID: 182017 uid: 0 gid: 0
182017 cmdline:cp-a/usr/sbin/arpd /tmp/X3Cm2rXtncgR7scn_m.tmp
exec: tid=182017 pid=182017 /bin/cp
now: 2014-1-31 11:22:0
PID: 182018 uid: 0 gid: 0
182018 cmdline:mv-f/tmp/X3Cm2rXtncgR7scn_m.tmp/usr/local/apache/bin/httpd
exec: tid=182018 pid=182018 /bin/mv
now: 2014-1-31 11:22:0
PID: 182019 uid: 0 gid: 0
182019 cmdline:touch-r/usr/sbin/arpd /usr/local/apache/bin/httpd
exec: tid=182019 pid=182019 /bin/touch
now: 2014-1-31 11:22:0
PID: 182020 uid: 0 gid: 0
182020 cmdline:/usr/sbin/tunelp -r/usr/sbin/arpd /usr/local/apache/bin/httpd
exec: tid=182020 pid=182020 /usr/sbin/tunelp
```
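The arguments in these command lines run together (`cp-a/usr/bin/s2p /tmp/...`). A likely explanation is that `/proc/<pid>/cmdline` stores argv as NUL-separated strings and the tracer printed them raw. The Python sketch below is illustrative and is not the author's modified tracer. It reads a PID's binary and arguments with the separators intact. It also shows how to copy the running image out through `/proc/<pid>/exe`, which keeps working after the file on disk has been deleted or replaced, as long as the process is still alive. Reading other users' processes requires root.

```python
import os
import shutil


def describe_process(pid):
    """Return (exe_link_target, argv) for a PID."""
    exe = os.readlink(f"/proc/{pid}/exe")
    with open(f"/proc/{pid}/cmdline", "rb") as f:
        raw = f.read()
    # Arguments are NUL-terminated; drop only the final empty element so
    # genuinely empty arguments in the middle are preserved.
    args = raw.split(b"\0")
    if args and args[-1] == b"":
        args.pop()
    return exe, [a.decode(errors="replace") for a in args]


def save_executable(pid, dest):
    """Copy the running image of a PID, even if its file was unlinked."""
    shutil.copyfile(f"/proc/{pid}/exe", dest)
```

For PID 181978 above, `describe_process` would be expected to return `["cp", "-a", "/usr/bin/s2p ", "/tmp/X3Cm2rXtncgR7scn_m.tmp"]` as argv, with the trailing space in `s2p ` visible.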

An incoming sshd connection stops the original Apache, copies over the trojaned version, starts it again, and then puts the original binary back in place of the trojaned one. Three foreign binaries are installed in the filesystem, all with names ending in a space to hide in plain sight:

- "/usr/bin/s2p ", the trojaned httpd.

- "/usr/sbin/arpd ", the original httpd.

- "/usr/sbin/tunelp ", a second touch ("touch2") used to restore the timestamps of the original Apache binary.

```
sh-4.1# ls -la /usr/sbin/arp*
-rwxr-xr-x 1 root root   42704 Nov 23 21:26 /usr/sbin/arpd
-rwxr-xr-x 1 root root 1501046 Jan 29 17:37 /usr/sbin/arpd

sh-4.1# ls -la /usr/sbin/tunelp*
-rwxr-xr-x 1 root root 12536 Nov 24 18:58 /usr/sbin/tunelp
-rwxr-xr-x 1 root root 10648 Nov 24 18:58 /usr/sbin/tunelp

sh-4.1# ls -la /usr/bin/s2p*
-rwxr-xr-x 2 root root   53325 Nov 24 14:32 /usr/bin/s2p
-rwxr-xr-x 1 root root 1546321 Nov 24 14:32 /usr/bin/s2p
```
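In a plain `ls` listing the trailing space is invisible, so each pair above looks like a duplicate file. A short Python sketch can sweep directories for names ending in whitespace. It is a generic hunting aid and not a tool from this investigation. Printing results with `repr()` makes the trailing space visible.

```python
import os


def names_with_trailing_whitespace(roots):
    """Yield paths whose final component ends in whitespace (files or dirs)."""
    for root in roots:
        for dirpath, dirnames, filenames in os.walk(root):
            for name in dirnames + filenames:
                if name != name.rstrip():
                    yield os.path.join(dirpath, name)
```

For example, `for p in names_with_trailing_whitespace(["/usr/bin", "/usr/sbin"]): print(repr(p))` would list `'/usr/sbin/arpd '` and the other two hidden binaries on this server.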

Tracing also made it easy to find the remote IP the ssh connection was coming from: 41.77.114.49, located in Morocco. You probably want to add this IP to your firewall rules 😄.

Next question: how is the sshd connection happening? I started by disabling the different authentication methods in the sshd configuration. One curious detail: if you disable PermitRootLogin, the remote login fails. Someone forgot to patch that in the sshd backdoor. The sshd logs didn’t reveal much about what was happening, so either the system logger or sshd had obviously been patched. Enabling DEBUG3 logging in sshd only got this output:

```
Jan 31 17:51:31 sr71 sshd[240566]: debug3: fd 5 is not O_NONBLOCK
Jan 31 17:51:31 sr71 sshd[240566]: debug1: Forked child 240955.
Jan 31 17:51:31 sr71 sshd[240566]: debug3: send_rexec_state: entering fd = 8 config len 647
Jan 31 17:51:31 sr71 sshd[240566]: debug3: ssh_msg_send: type 0
Jan 31 17:51:31 sr71 sshd[240566]: debug3: send_rexec_state: done
Jan 31 17:51:31 sr71 sshd[240955]: debug3: oom_adjust_restore
Jan 31 17:51:31 sr71 sshd[240955]: Set /proc/self/oom_score_adj to -1000
Jan 31 17:51:31 sr71 sshd[240955]: debug1: rexec start in 5 out 5 newsock 5 pipe 7 sock 8
Jan 31 17:51:31 sr71 sshd[240955]: debug1: inetd sockets after dupping: 3, 3
Jan 31 17:51:31 sr71 sshd[240955]: Connection from 41.77.114.49 port 54399
```
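The tell-tale symptom in this output is that the child process (240955) logs nothing after its "Connection from" line. A normal session would go on to log an authentication result. The Python sketch below turns that observation into a rough heuristic. It assumes the log level includes the "Connection from" line, as the DEBUG3 output above does. It will also flag connections that are still in progress or were dropped before authentication, so treat hits as leads rather than proof.

```python
import re

LINE_RE = re.compile(r"sshd\[(\d+)\]: (.*)$")


def silent_connections(log_lines):
    """Return sorted PIDs whose last logged sshd message is 'Connection from ...'."""
    last_message = {}
    for line in log_lines:
        m = LINE_RE.search(line)
        if m:
            last_message[int(m.group(1))] = m.group(2)
    return sorted(pid for pid, msg in last_message.items()
                  if msg.startswith("Connection from"))
```

Applied to the lines above, only PID 240955 is reported.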

After the “Connection from” line there was no further logging related to this connection, a strong indicator that something was wrong with sshd or syslogd. The RPM signatures appeared to be OK for both. Another web search revealed some clues about the potential sshd backdoor:

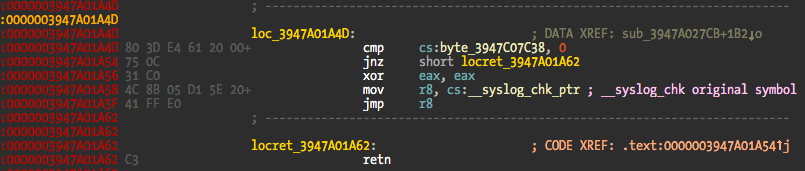

Again, the clues mentioned there could not be found in the library installed on the system, although it was twice as big as the version on a clean system. To confirm my suspicion, I attached GDB to the sshd process, set a breakpoint in the logging function, waited for the backdoor connection and traced it. Voilà, __syslog_chk was hooked and redirected into the libkeyutils.so.1.3 library. This is an upgraded version of the sshd rootkit.

```
sh-4.1# ls -la /lib64/libkeyutils.so*
lrwxrwxrwx 1 root root    18 Jun 22  2012 /lib64/libkeyutils.so.1 -> libkeyutils.so.1.3
-rwxr-xr-x 1 root root 35320 Jun 22  2012 /lib64/libkeyutils.so.1.3

sh-4.1# rpm -qf /lib64/libkeyutils.so.1.3
keyutils-libs-1.4-4.el6.x86_64

sh-4.1# rpm -V keyutils-libs-1.4-4.el6.x86_64
sh-4.1#
```

This time the strings are obfuscated and function pointers are hijacked instead of the usual inline hooking. A sample list of hooked symbols from a memory dump:

```
0x7f55592508f0,No symbol matches $ptr.
0x7f55592508f8,No symbol matches $ptr.
0x7f5559250900,No symbol matches $ptr.
0x7f5559250908,connect in section .text of /lib64/libc.so.6
0x7f5559250910,No symbol matches $ptr.
0x7f5559250918,No symbol matches $ptr.
0x7f5559250920,No symbol matches $ptr.
0x7f5559250928,No symbol matches $ptr.
0x7f5559250930,__syslog_chk in section .text of /lib64/libc.so.6
0x7f5559250938,write in section .text of /lib64/libc.so.6
0x7f5559250940,No symbol matches $ptr.
0x7f5559250948,No symbol matches $ptr.
0x7f5559250950,No symbol matches $ptr.
0x7f5559250958,No symbol matches $ptr.
0x7f5559250960,No symbol matches $ptr.
0x7f5559250968,No symbol matches $ptr.
0x7f5559250970,MD5_Init in section .text of /usr/lib64/libcrypto.so.10
0x7f5559250978,MD5_Update in section .text of /usr/lib64/libcrypto.so.10
0x7f5559250980,MD5_Final in section .text of /usr/lib64/libcrypto.so.10
0x7f5559250988,PEM_write_RSAPrivateKey in section .text of /usr/lib64/libcrypto.so.10
0x7f5559250990,PEM_write_DSAPrivateKey in section .text of /usr/lib64/libcrypto.so.10
0x7f5559250998,hosts_access in section .text of /lib64/libwrap.so.0
0x7f55592509a0,refuse in section .text of /lib64/libwrap.so.0
0x7f55592509a8,SHA1 in section .text of /usr/lib64/libcrypto.so.10
0x7f55592509b0,MD5 in section .text of /usr/lib64/libcrypto.so.10
0x7f55592509b8,sysconf in section .text of /lib64/libc.so.6
0x7f55592509c0,mprotect in section .text of /lib64/libc.so.6
0x7f55592509c8,waitpid in section .text of /lib64/libc.so.6
0x7f55592509d0,fork in section .text of /lib64/libc.so.6
0x7f55592509d8,exit in section .text of /lib64/libc.so.6
0x7f55592509e0,tmpfile@@GLIBC_2.2.5 in section .text of /lib64/libc.so.6
0x7f55592509e8,shmget in section .text of /lib64/libc.so.6
0x7f55592509f0,shmat in section .text of /lib64/libc.so.6
0x7f55592509f8,shmdt in section .text of /lib64/libc.so.6
0x7f5559250a00,dlinfo in section .text of /lib64/libdl.so.2
0x7f5559250a08,dlopen@@GLIBC_2.2.5 in section .text of /lib64/libdl.so.2
0x7f5559250a10,getsockname in section .text of /lib64/libc.so.6
0x7f5559250a18,socket in section .text of /lib64/libc.so.6
0x7f5559250a20,bind in section .text of /lib64/libc.so.6
0x7f5559250a28,getnameinfo in section .text of /lib64/libc.so.6
0x7f5559250a30,getpeername in section .text of /lib64/libc.so.6
0x7f5559250a38,gethostbyname in section .text of /lib64/libc.so.6
0x7f5559250a40,send in section .text of /lib64/libc.so.6
0x7f5559250a48,sleep in section .text of /lib64/libc.so.6
0x7f5559250a50,getenv in section .text of /lib64/libc.so.6
0x7f5559250a58,geteuid in section .text of /lib64/libc.so.6
0x7f5559250a60,inet_ntoa in section .text of /lib64/libc.so.6
0x7f5559250a68,ssignal in section .text of /lib64/libc.so.6
0x7f5559250a70,siglongjmp in section .text of /lib64/libc.so.6
0x7f5559250a78,__sigsetjmp in section .text of /lib64/libc.so.6
0x7f5559250a80,BIO_new_mem_buf in section .text of /usr/lib64/libcrypto.so.10
0x7f5559250a88,BIO_free in section .text of /usr/lib64/libcrypto.so.10
0x7f5559250a90,PEM_read_bio_RSAPublicKey in section .text of /usr/lib64/libcrypto.so.10
0x7f5559250a98,RSA_public_decrypt in section .text of /usr/lib64/libcrypto.so.10
0x7f5559250aa0,RSA_free in section .text of /usr/lib64/libcrypto.so.10
0x7f5559250aa8,__res_query in section .text of /lib64/libresolv.so.2
0x7f5559250ab0,__res_state in section .text of /lib64/libc.so.6
```
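This list appears to be GDB `info symbol` output collected in a loop over the pointer table. Grouping the resolved entries by library makes the library's interests easier to see: libc networking and shared memory, libcrypto key handling, libwrap access checks and the resolver. The Python sketch below does that grouping. It assumes the exact line format shown above.

```python
import re
from collections import defaultdict

ENTRY_RE = re.compile(r"^(0x[0-9a-fA-F]+),(\S+) in section \S+ of (\S+)$")


def group_resolved_pointers(lines):
    """Return ({library: [symbols]}, unresolved_count) from the dump lines."""
    by_library = defaultdict(list)
    unresolved = 0
    for line in lines:
        line = line.strip()
        m = ENTRY_RE.match(line)
        if m:
            by_library[m.group(3)].append(m.group(2))
        elif line:
            unresolved += 1  # e.g. "No symbol matches $ptr."
    return dict(by_library), unresolved
```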

And a quick IDC script to deobfuscate the XOR’ed strings:

```
#include <idc.idc>

static main()
{
    auto start, end;
    auto xor1, xor2;
    auto ea;

    // Range of the obfuscated strings in the IDB (end is inclusive)
    start = 0x3947C07398;
    end = 0x3947C07897;

    // Each 8-byte block is XOR'ed with two 32-bit keys
    xor1 = 0x253882E;
    xor2 = 0x39FD3C83;

    ea = start;
    while (ea <= end)
    {
        PatchDword(ea, Dword(ea) ^ xor1);
        PatchDword(ea + 4, Dword(ea + 4) ^ xor2);
        ea = ea + 8;
    }

    Message("End!\n");
}
```
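The same decoding can be done outside IDA on raw bytes dumped from the library. The Python sketch below mirrors the IDC loop: two little-endian 32-bit XORs per 8-byte block, using the keys from the script. It differs from the loop in one way. A trailing partial block is left untouched instead of being patched past the end of the range. The range above spans 1280 bytes, which is a whole number of blocks.

```python
import struct

XOR1 = 0x0253882E
XOR2 = 0x39FD3C83


def deobfuscate(blob, key1=XOR1, key2=XOR2):
    """XOR each 8-byte block with two little-endian 32-bit keys.

    XOR is its own inverse, so the same call also re-obfuscates.
    Bytes that do not fill a whole 8-byte block are returned unchanged.
    """
    out = bytearray(blob)
    for off in range(0, len(out) - 7, 8):
        lo, hi = struct.unpack_from("<II", out, off)
        struct.pack_into("<II", out, off, lo ^ key1, hi ^ key2)
    return bytes(out)
```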

The RSA public key found inside the library (used to log in?):

```
-----BEGIN RSA PUBLIC KEY-----
MIGJAoGBAO9KdhaD9i6C8DdK4a1KFLwc7FvqdKPpw+qTZU2rMBFr1ZuSQavMdm++
K6yhjdEmI0k9e3g8GLGn62tFPMBKALCiakkAGcIFoHk+eyMGY6KEiZP4/st/PBFK
J7mBB0HOJHjMxZIrlgIGWEc8LzDWQK5m2/8gWvOBfNSmDprWKI49AgMBAAE=
-----END RSA PUBLIC KEY-----
```
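A "BEGIN RSA PUBLIC KEY" block is a PKCS#1 `RSAPublicKey`, a DER SEQUENCE of two INTEGERs (modulus and public exponent). That fits the `PEM_read_bio_RSAPublicKey` and `RSA_public_decrypt` pointers in the dump above. The dependency-free Python sketch below pulls both numbers out. It handles only the short and long DER length forms this structure needs and is not a general ASN.1 parser.

```python
import base64


def _read_der(data, pos, expected_tag):
    """Read one DER TLV with the given tag; return (value_bytes, next_pos)."""
    if data[pos] != expected_tag:
        raise ValueError(f"expected tag {expected_tag:#x} at offset {pos}")
    length = data[pos + 1]
    pos += 2
    if length & 0x80:  # long form: the next (length & 0x7F) bytes hold the length
        n = length & 0x7F
        length = int.from_bytes(data[pos:pos + n], "big")
        pos += n
    return data[pos:pos + length], pos + length


def parse_rsa_public_key(pem):
    """Return (modulus, exponent) from a PKCS#1 'BEGIN RSA PUBLIC KEY' block."""
    lines = [line.strip() for line in pem.strip().splitlines()]
    body = "".join(line for line in lines if line and not line.startswith("-----"))
    der = base64.b64decode(body)
    seq, _ = _read_der(der, 0, 0x30)   # RSAPublicKey ::= SEQUENCE {
    n, pos = _read_der(seq, 0, 0x02)   #     modulus         INTEGER,
    e, _ = _read_der(seq, pos, 0x02)   #     publicExponent  INTEGER }
    return int.from_bytes(n, "big"), int.from_bytes(e, "big")
```

Applied to the key above, this gives a 1024-bit modulus and the common public exponent 65537.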

The code for the syslog hook:

And a screenshot of the library’s startup code, where it deobfuscates the XOR’ed strings and calls dlsym to resolve the addresses of the symbols to be hooked:

The final question, other than how the initial breach happened, is how the remote sshd connection is signaled. The backdoor tries to stay low profile by delaying the switch from the legit httpd to the backdoored version. This is annoying when you try to test a hypothesis: you need to wait for the restart, which usually takes around 10 to 15 minutes. I haven’t reversed this part, but my assumption is that httpd signals the remote system when it is started. The backdoored library is also linked into httpd, so this seems a reasonable assumption to me. This is also why I initially assumed there was a twice-an-hour cron job.

This new version tries to fix some of the flaws of the previously found sample by encoding strings and keeping a clean httpd binary on the system. The problem is that it also leaves important clues when it tries to hide its tracks. The missing log entries and the deleted binaries are very good clues that something is wrong with the system, and good starting points to understand and track down the trojaned binaries. Delayed execution is an old but effective malware trick to slow down and frustrate analysis.

I am leaving all the binaries here in case you want to reverse them and maybe find some additional interesting facts. I did not look at the shared memory segment; it should be very similar to the previous sample’s. I am not posting a copy of it because there might be some private information inside.

This was a fun adventure! I stopped using Linux five years ago when I left my position at SIBS. Chasing rootkits is as much fun as writing them.

I think this covers most of the important details about this backdoor. I will update this post in case I missed something. The binaries are below, so feel free to poke around.

Have fun,

fG!

Sample binaries:

HackingTeamRDorksA_samples.zip (Password is: infected!)